What Is TOON?

More Output, Fewer Tokens: The Case for Switching from JSON to TOON

As AI becomes a standard part of web development, marketing, and automation, more businesses are learning that the real cost of AI isn’t just the subscription—it’s the tokens. Every request to a large language model (LLM) is metered, billed, and scaled by the number of tokens sent and received. When your data is structured in verbose formats like JSON, you are paying for every brace, quote, comma, and repeated field name.

Switching from JSON to a lightweight format called TOON allows you to transmit the same structured data with dramatically fewer tokens, often reducing input size by 30–50 percent. For companies integrating AI into websites, product catalogs, scheduling systems, marketing engines, and e-commerce automation, that difference creates measurable savings.

At Host Much, where affordability and efficiency matter, this shift can directly reduce operational costs while improving speed and throughput for client projects.

Why JSON Costs So Many Tokens

JSON (JavaScript Object Notation) was designed in the early 2000s as a lightweight, human-readable format for data exchange between web browsers and servers. Its simplicity and compatibility with JavaScript made it the default choice for APIs, configuration files, and structured data storage.

While JSON is easy for both machines and developers to use, it has several inefficiencies that make it less than ideal for large-scale, token-sensitive LLM workloads:

- Extreme Verbosity

JSON relies on repeated structural symbols (quotes, brackets, braces, and keys) that add size without adding information. In an array of objects, every key is spelled out again for every record, which inflates the input token count. In an LLM context, this verbosity uses up context space and raises cost.

- Lack of Contextual Compression

JSON does not take advantage of pattern repetition or schema inference. The model must read redundant elements like "user":, "id":, and "name": in every record, even though the structure never changes. Those repeated labels consume tokens that could otherwise go toward reasoning or generation.

- Inefficient Tokenization

When JSON is tokenized for an LLM, quotation marks, braces, colons, and commas are tokenized along with the data, frequently as tokens of their own. This raises the token count, and with it cost and latency. A simple table of data can turn into hundreds of tokens, even when only a handful of values matter.

- Unclear Hierarchical Relationships

JSON’s nested syntax relies on brackets and braces to define relationships. Those delimiters give a model less direct context than a tabular layout (like CSV or TOON), where each value sits under a named column. In deeply nested data, the model has to track the hierarchy before it can interpret the values.

- High Overhead in Prompt Engineering

Developers often use JSON to embed structured data in prompts for LLMs, but its noise and redundancy make prompt engineering cumbersome. It’s easy to hit token limits or to introduce parsing errors such as a missing brace or a trailing comma, and part of the context window goes to the format rather than to the user’s intent.

In short, JSON’s human readability and web-era design come at a cost: it’s inefficient, redundant, and expensive when scaled to the token-sensitive environments of modern large language models.
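To make the overhead concrete, here is a minimal sketch that estimates how much of a compact JSON payload is keys and punctuation rather than values. It uses character counts as a rough stand-in for tokens (real token counts depend on the model's tokenizer), the data mirror the product example used in this article, and `structural_share` is a hypothetical helper name.

```python
import json

def structural_share(records: list[dict]) -> float:
    """Fraction of the compact JSON text that is keys and punctuation, not values."""
    text = json.dumps(records, separators=(",", ":"))
    # Characters needed for the values alone, as they appear in the JSON text.
    value_chars = sum(
        len(json.dumps(value)) for record in records for value in record.values()
    )
    return 1 - value_chars / len(text)

products = [
    {"id": 1, "name": "Laptop", "price": 3999.90},
    {"id": 2, "name": "Mouse", "price": 149.90},
    {"id": 3, "name": "Headset", "price": 499.00},
]

print(f"{structural_share(products):.0%} of the characters are keys and punctuation")
```

Even with all whitespace removed, well over half of this payload is structure, and that share stays roughly constant as more records are added because every record repeats its keys.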

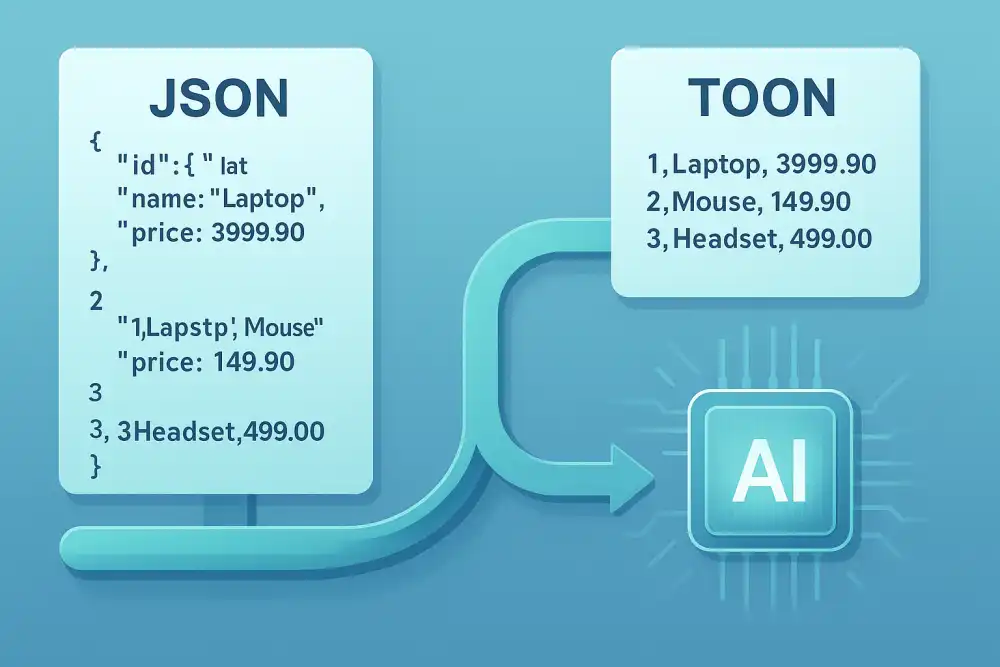

Example, simplified:

JSON (about 125 tokens):

    {
      "products": [
        {
          "id": 1,
          "name": "Laptop",
          "price": 3999.90
        },
        {
          "id": 2,
          "name": "Mouse",
          "price": 149.90
        },
        {
          "id": 3,
          "name": "Headset",
          "price": 499.00
        }
      ]
    }

TOON: Same Data, Fewer Tokens

TOON version (about 70 tokens):

    products[3]{id,name,price}:
      1,Laptop,3999.90
      2,Mouse,149.90
      3,Headset,499.00

Field names appear once, structure is clear, and the model receives the same meaning with significantly fewer tokens.
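The tabular layout above can be produced with a few lines of code. The following is a simplified sketch, not the official TOON encoder: it handles only a list of flat records that share the same keys, indents rows by two spaces, and applies a reduced quoting rule that is an assumption on our part. The names `format_value` and `encode_table` are hypothetical.

```python
import json

def format_value(value) -> str:
    """Render one cell; quote strings only when a bare value would be ambiguous."""
    if value is None:
        return "null"
    if isinstance(value, bool):  # check bool before int: bool is a subclass of int
        return "true" if value else "false"
    if isinstance(value, (int, float)):
        return str(value)
    text = str(value)
    # Simplified rule (an assumption): quote empty strings, padded strings,
    # and strings containing a comma, colon, double quote, or newline.
    if text == "" or text != text.strip() or any(ch in text for ch in ',:"\n'):
        return json.dumps(text, ensure_ascii=False)
    return text

def encode_table(name: str, rows: list[dict]) -> str:
    """Encode a list of flat records that share the same keys as one tabular block."""
    if not rows:
        return f"{name}[0]:"
    fields = list(rows[0])
    if any(list(row) != fields for row in rows):
        raise ValueError("every row must have the same keys in the same order")
    header = f"{name}[{len(rows)}]{{{','.join(fields)}}}:"
    body = ["  " + ",".join(format_value(row[field]) for field in fields) for row in rows]
    return "\n".join([header, *body])

products = [
    {"id": 1, "name": "Laptop", "price": 3999.90},
    {"id": 2, "name": "Mouse", "price": 149.90},
    {"id": 3, "name": "Headset", "price": 499.00},
]
print(encode_table("products", products))
```

Python floats print without trailing zeros, so 3999.90 appears as 3999.9 in the output. For production use, a maintained TOON library is a safer choice than a hand-rolled encoder like this one.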

Benefits of TOON

- Compact and Token-Efficient

TOON eliminates redundant syntax such as most quotation marks, braces, and repeated keys. By encoding structure through lightweight delimiters, it can reduce token usage by up to 70% compared to JSON in the best case, with 30–50 percent being more typical. This means smaller prompts, lower API costs, and faster model response times.

- LLM-Friendly Structure

TOON is designed for language models, not compilers. Its tabular, column-aware layout lets models infer relationships between entities (such as users, orders, or attributes) without working through heavy syntax, which can make structured reasoning smoother and more accurate.

- Readable by Humans and Machines

While compact, TOON preserves clarity. Developers can scan and edit TOON data almost as easily as a CSV or YAML file, and models can read it directly in a prompt without a separate schema definition.

- Supports Nesting Without Noise

TOON keeps the logical hierarchy of complex data (arrays, objects, and sub-records) but expresses it with indentation and compact headers instead of multiple levels of braces and commas. Nested structures remain easy to visualize at a glance.

- Schema-Implicit Design

Instead of repeating keys in every record, TOON declares the field names once in an array header (for example, users[2]{id,name,plan}:) and then lists one row of values per record. This mirrors how people read data tables and gives the model a single, consistent pattern to follow.

- Fewer Parsing Errors

With no braces to balance and no trailing commas to forget, there are fewer ways to make a formatting mistake. Quotes are needed only for values that would otherwise be ambiguous, such as a string that contains a comma. The rules are simple and consistent, which suits embedding data inside prompts or generating dynamic responses.

- Flexible and Backward-Compatible

TOON can be converted to and from JSON easily. Developers can use it in place of JSON in LLM prompts while continuing to store or transmit data in JSON for backend systems.

- Improved Context Density

Because TOON packs more information into fewer tokens, larger datasets or richer metadata fit in the same context window, enabling more complex reasoning without truncation.

- Natural for Multi-Record Data

JSON becomes cumbersome with large lists of similar objects. TOON excels at representing many rows of structured data efficiently, making it a good fit for database query results, analytics summaries, or multi-user prompts.

- Optimized for Future AI Workflows

TOON bridges the gap between structured databases and natural language interfaces. It provides a streamlined intermediate format optimized for how LLMs “read,” helping AI systems process structured information as efficiently as humans scan tables.
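The "Flexible and Backward-Compatible" point can be illustrated with a small decoder that turns a tabular block back into JSON. This is a sketch under simplifying assumptions: it reads a single flat tabular block only, leaves every value as a string instead of restoring numbers, and uses a hypothetical function name, `decode_table`.

```python
import csv
import json
import re

# Matches headers such as products[3]{id,name,price}:
HEADER = re.compile(r"^(\w+)\[(\d+)\]\{([^}]*)\}:$")

def decode_table(block: str) -> dict:
    """Turn one tabular block into a JSON-compatible dict: {name: [records]}."""
    lines = [line.strip() for line in block.splitlines() if line.strip()]
    match = HEADER.match(lines[0]) if lines else None
    if match is None:
        raise ValueError("expected a header such as products[3]{id,name,price}:")
    name = match.group(1)
    declared = int(match.group(2))
    fields = match.group(3).split(",")
    # csv.reader copes with double-quoted cells that contain commas.
    records = [dict(zip(fields, cells)) for cells in csv.reader(lines[1:])]
    if len(records) != declared:
        raise ValueError(f"header declares {declared} rows, found {len(records)}")
    return {name: records}

toon = """products[3]{id,name,price}:
  1,Laptop,3999.90
  2,Mouse,149.90
  3,Headset,499.00"""
print(json.dumps(decode_table(toon), indent=2))
```

Because the header states both the row count and the field names, the decoder can reject a truncated block instead of silently returning partial data.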

Real Cost Impact

If an LLM API charges around $1 per 1,000 tokens, the savings accumulate quickly.

| Scenario | JSON Tokens | TOON Tokens | Token Savings | Percentage Savings |
|---|---|---|---|---|
| Single Marketing Request | 10,000 | 6,000 | 4,000 | 40% |
| Weekly per Client (100 Requests) | 1,000,000 | 600,000 | 400,000 | 40% |
| Yearly Across 50 Clients (52 Weeks) | 2,600,000,000 | 1,560,000,000 | 1,040,000,000 | 40% |

1,040,000,000 tokens saved per year, billed at the illustrative rate of $1 per 1,000 tokens, equals $1,040,000 in savings without changing providers, models, or infrastructure.
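The arithmetic behind the single-request row is easy to reproduce for your own numbers. The sketch below assumes the illustrative price of $1 per 1,000 tokens used in this section; `token_savings` is a hypothetical helper, and the `requests` multiplier is there so you can plug in your own volume.

```python
def token_savings(json_tokens: int, toon_tokens: int,
                  requests: int = 1, price_per_1k: float = 1.0) -> dict:
    """Tokens, percentage, and dollars saved when a request shrinks from JSON to TOON size."""
    saved_per_request = json_tokens - toon_tokens
    saved = saved_per_request * requests
    return {
        "tokens_saved": saved,
        "percent_saved": 100 * saved_per_request / json_tokens,
        "dollars_saved": saved / 1000 * price_per_1k,
    }

print(token_savings(10_000, 6_000))
# {'tokens_saved': 4000, 'percent_saved': 40.0, 'dollars_saved': 4.0}
```

Substitute your provider's actual per-token price and your measured request sizes to get a realistic estimate.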

How to Start Using TOON

Switching from JSON to TOON is straightforward because TOON represents the same data as JSON while removing unnecessary syntax. Start by identifying areas where your prompts or datasets use JSON, such as API responses, configuration data, or structured LLM inputs. For arrays of similar records, replace the braces, quotation marks, and repeated keys with a TOON header that names the fields once, followed by one comma-separated row of values per record. You can automate this conversion using an AI model to rewrite your existing JSON into TOON. For example, use a prompt like:

AI Conversion Prompt:

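“Convert the following JSON into TOON format, preserving all hierarchy and array relationships. Use two-space indentation, write plain objects as key: value lines, and write arrays of uniform objects as a name[N]{fields}: header followed by one indented, comma-separated row per item. Example input: {"users":[{"id":4,"name":"Sarah","plan":"Gold"}]} → users[1]{id,name,plan}: followed by the indented row 4,Sarah,Gold. Now convert this JSON:”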

This approach allows developers and teams to quickly migrate existing structured data into a leaner, LLM-optimized format that reduces token usage and improves model comprehension without changing backend logic.
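Model-generated conversions can drop rows or columns, so it is worth checking the result before using it. The sketch below is one possible check for a tabular block such as the products example: it compares the row count and field count declared in the header against the rows that follow. `check_table` is a hypothetical name, and the check covers flat tabular blocks only.

```python
import csv
import re

# Captures N and the field list from a header such as products[3]{id,name,price}:
HEADER = re.compile(r"^\s*[\w.-]+\[(\d+)\]\{([^}]*)\}:\s*$")

def check_table(block: str) -> list[str]:
    """Return a list of problems found in one tabular block (empty if none)."""
    lines = [line for line in block.splitlines() if line.strip()]
    if not lines:
        return ["block is empty"]
    match = HEADER.match(lines[0])
    if match is None:
        return ["first line is not a name[N]{fields}: header"]
    declared = int(match.group(1))
    width = len(match.group(2).split(","))
    rows = lines[1:]
    problems = []
    if len(rows) != declared:
        problems.append(f"header declares {declared} rows, found {len(rows)}")
    for number, cells in enumerate(csv.reader(row.strip() for row in rows), start=1):
        if len(cells) != width:
            problems.append(f"row {number} has {len(cells)} values, expected {width}")
    return problems

converted = "products[3]{id,name,price}:\n  1,Laptop,3999.90\n  2,Mouse"
print(check_table(converted))
# ['header declares 3 rows, found 2', 'row 2 has 2 values, expected 3']
```

A deterministic converter avoids this class of error entirely; the check is mainly useful when a model produces the TOON text.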

Replace JSON in prompts

Wherever you pass structured data to an LLM, switch from JSON to TOON formatting.

JSON

{"user":{"id":4,"name":"Sarah","plan":"Gold"}}

TOON

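    user:
      id: 4
      name: Sarah
      plan: Gold

The name[N]{fields}: header applies to arrays of records, so a single object is written as indented key: value lines. The savings here come from dropping the braces and quotes; the larger gains appear once there are many records to tabulate.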

Keep nested structure

TOON supports nesting while still reducing token overhead.

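    users[1]:
      - id: 1
        name: Sarah
        orders[2]{order_id,total,status}:
          881,149.90,shipped
          882,39.90,processing

Because a tabular row can hold only simple values, the user record is written as a list item and its orders become a nested tabular block.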

Update prompt instructions

Include a clear line such as:

Return structured data in TOON format using the schema below.
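One way to wire that instruction into a prompt is sketched below. The function name `build_prompt`, the section label "Input data (TOON):", and the sample task are all illustrative assumptions; only the instruction line itself comes from this article.

```python
def build_prompt(task: str, toon_data: str, output_header: str) -> str:
    """Assemble a prompt that supplies TOON input and requests TOON output."""
    return "\n\n".join([
        task.strip(),
        "Input data (TOON):\n" + toon_data.strip(),
        "Return structured data in TOON format using the schema below.\n"
        + output_header.strip(),
    ])

prompt = build_prompt(
    task="Write a one-sentence description for each product.",
    toon_data="products[2]{id,name,price}:\n  1,Laptop,3999.90\n  2,Mouse,149.90",
    output_header="descriptions[2]{id,description}:",
)
print(prompt)
```

Giving the model the exact output header, including the expected row count, makes it easier to validate the response afterward.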

Measure savings

Use token counters from your LLM provider to track input and output size. After converting from JSON to TOON you should see smaller prompts, faster responses, lower usage, and fewer context-limit issues. If you need help developing your models or MCPs, feel free to reach out to us!

Perfect Fit for Host Much

Host Much focuses on giving small businesses high-end digital solutions without inflated costs. Using TOON inside AI-driven workflows helps:

- Reduce the cost of generating product descriptions, SEO content, and emails

- Fit more data into a single AI prompt without hitting token limits

- Produce faster turnarounds with less model overhead

- Offer AI-augmented services at a more competitive price

Final Takeaway

Switching from JSON to TOON is one of the simplest high-impact improvements in any AI workflow that passes structured data to a model. The model receives the same data, clients experience the same or better results, and the operational cost of running AI-powered services drops significantly. For agencies, developers, and marketers scaling AI across multiple clients, TOON provides a direct cost advantage with little downside, particularly for list-like data where the same fields repeat across many records.

At Host Much, we’re always looking for ways to make technology faster, smarter, and more efficient for our clients. The adoption of TOON represents a major step forward in how we communicate structured data with AI — cutting down token usage, improving model accuracy, and delivering results faster than ever before. We’re excited to bring this efficiency into our hosting, marketing, and web automation workflows to help businesses save time, reduce costs, and unlock more powerful insights from their data. Explore how Host Much is leading the next generation of AI-ready web infrastructure — built for performance, precision, and growth.